Moving data across systems has never been easy, as multiple data sources, custom pipelines, inconsistent governance, and a mix of batch and real-time demands all converge to create bottlenecks. For many organizations, integrating data from disparate sources like enterprise databases, SaaS platforms, and third-party systems still involves outdated tools, heavy operational overhead, and fragmented visibility.

Lakeflow turns that around. Built into the Databricks Lakehouse Platform, Lakeflow reimagines data integration as a fully managed, unified experience that simplifies every stage of the process through:

- Lakeflow Connect for streamlined ingestion from a wide range of sources

- Lakeflow Pipelines for flexible and scalable transformation across workloads

- Lakeflow Jobs for end-to-end orchestration with built-in reliability

As organizations increasingly prioritize real-time insights and governed, scalable data operations, Lakeflow Connect emerges as the cornerstone of modern ingestion strategies. Whether you’re centralizing siloed data across business units or enabling real-time reporting from operational systems, Lakeflow Connect plays a critical role in making it seamless. It serves as the ingestion framework within the Lakeflow suite, designed to simplify data collection from diverse sources across both batch and streaming workloads.

Moving beyond traditional ETL approaches

Traditional ETL processing often involves delayed batch cycles, siloed source connectivity, and fragmented orchestration tools. These workflows are not only complex to manage but also create lags that hinder timely insights. Even modern tools like Delta Live Tables, though useful, have limitations when it comes to handling real-time ingestion across diverse enterprise systems.

Lakeflow Connect breaks through these constraints by offering a fully managed, connector-first ingestion solution.

How Lakeflow Connect works

Lakeflow Connect is a purpose-built data ingestion solution that simplifies and automates the movement of data from various enterprise systems into the Lakehouse. It operates as an integrated component of the Databricks platform, built to handle complex ingestion demands with robust governance and scalable performance.

Here’s how Lakeflow Connect delivers secure data ingestion by leveraging managed connectors, real-time change data capture, and integration with governance and security frameworks:

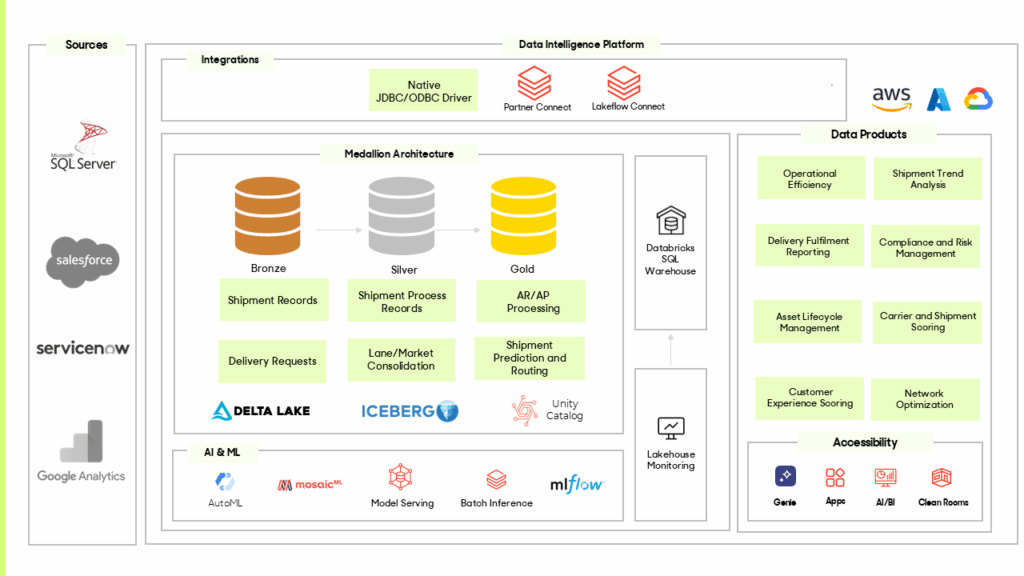

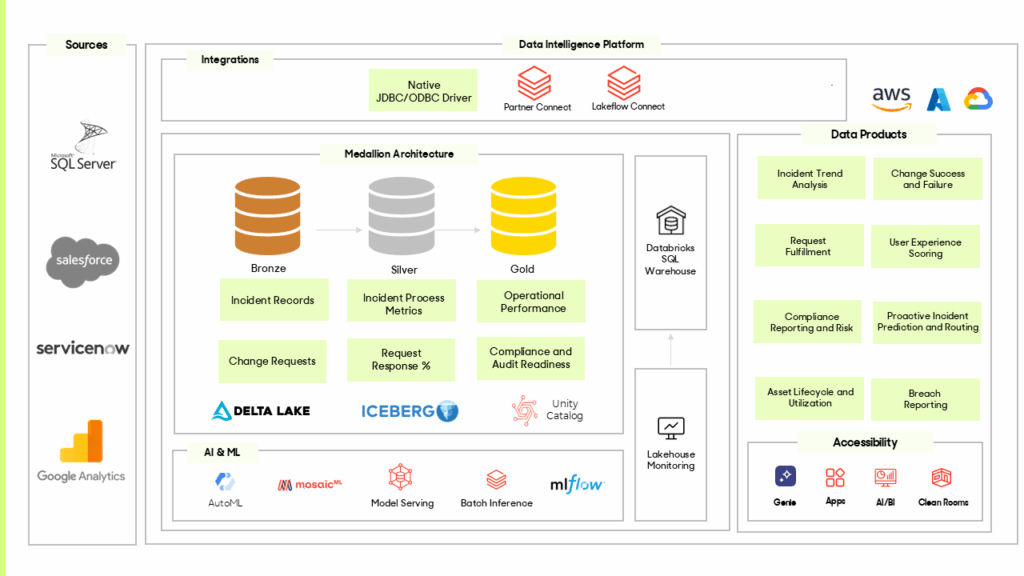

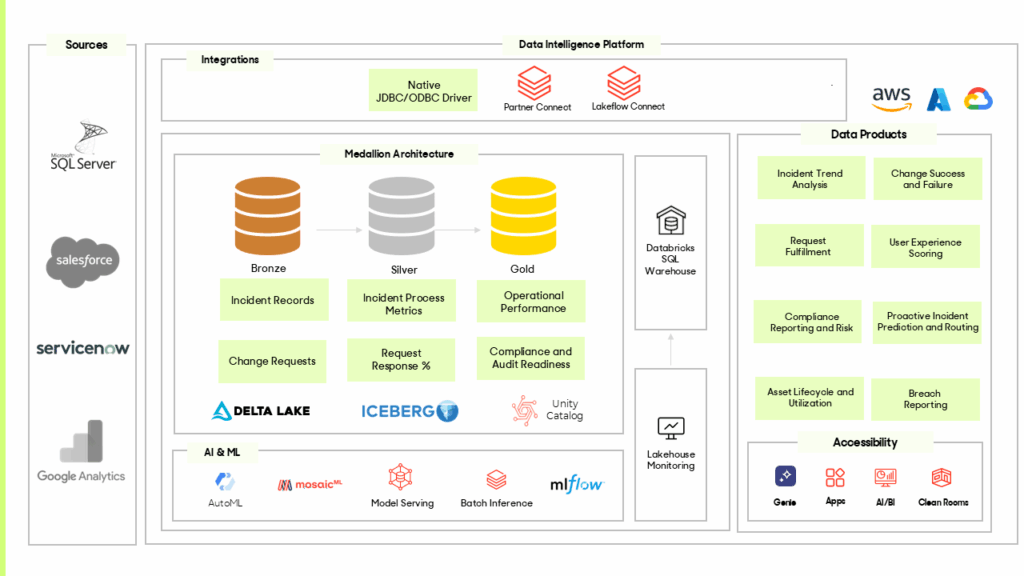

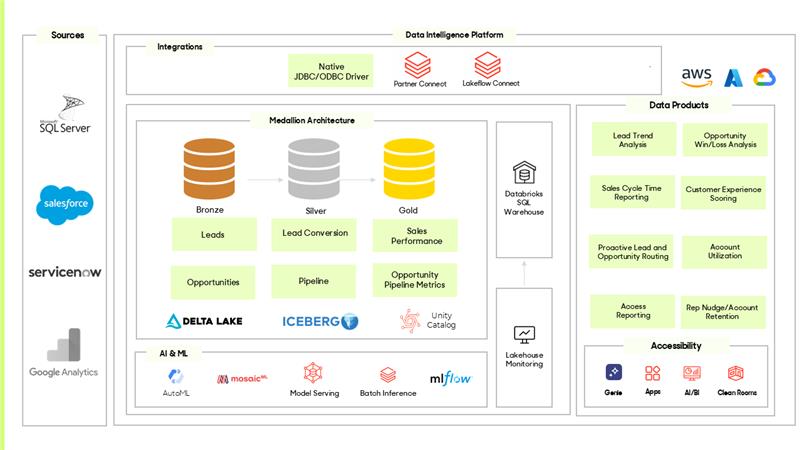

1. Prebuilt, Managed Connectors

Lakeflow Connect offers a growing set of prebuilt connectors that cover a broad spectrum of data sources, ranging from relational databases and SaaS platforms to cloud object stores and on-premises file systems. Today, connectors are available for SQL Server, Salesforce, ServiceNow, and Google Analytics. These connectors are fully managed, minimizing manual overhead and removing the need to write and maintain custom ingestion logic. Each connector supports multiple ingestion modes, including full loads and incremental updates, so data movement can be matched to workload needs.

2. Change Data Capture (CDC)

A standout feature of Lakeflow Connect is its robust support for Change Data Capture (CDC). Powered by technology from Databricks’ acquisition of Arcion, it captures incremental changes from source systems and applies them efficiently to the Lakehouse. This enables near real-time ingestion workflows that keep data fresh and synchronized without requiring full data reloads, which reduces resource usage and latency.
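To make the idea concrete, here is a minimal, purely conceptual sketch of how a stream of change events can be applied to a target table instead of reloading it. It is not the Lakeflow Connect API: the event shape (`op`, `key`, `row`) and the `apply_changes` helper are assumptions made for illustration, and an in-memory dictionary stands in for the target table.

```python
from typing import Any

# Hypothetical change-event shape (an assumption for this sketch):
#   {"op": "insert" | "update" | "delete", "key": <primary key>, "row": {...}}

def apply_changes(target: dict[Any, dict], events: list[dict]) -> dict[Any, dict]:
    """Apply ordered change events to an in-memory 'table' keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Upsert: merge the changed columns over any existing row.
            target[key] = {**target.get(key, {}), **event["row"]}
        elif op == "delete":
            # Remove the row if present; ignore deletes for unknown keys.
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target

table = {1: {"name": "Ada", "tier": "free"}}
changes = [
    {"op": "update", "key": 1, "row": {"tier": "pro"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "tier": "free"}},
    {"op": "delete", "key": 1},
]
result = apply_changes(table, changes)
print(result)
```

Only the three changed rows are processed, regardless of how large the target table is, which is why CDC avoids the cost of full reloads.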

3. Low-Code/No-Code Interface

With a visual, intuitive interface, Lakeflow Connect allows users to set up ingestion workflows without writing code. Through guided configurations, users can define source connections, set load frequency, handle schema mapping, and enable CDC, all from a unified control panel. This accessibility ensures that ingestion becomes a collaborative effort between technical and non-technical users alike.

4. Seamless Integration with Databricks Unity Catalog

From the moment data is ingested, Lakeflow Connect ensures that governance and security controls are enforced consistently. It integrates with Unity Catalog, Databricks’ centralized governance framework, providing fine-grained access controls, role-based permissions, comprehensive auditing, and detailed data lineage tracking. This native integration ensures that ingestion aligns with enterprise-level compliance mandates without additional tooling.

5. Scalability and Reliability Through Managed Infrastructure

Built on the scalable infrastructure of the Databricks Lakehouse Platform, Lakeflow Connect can handle large-scale ingestion workloads with ease. Its architecture supports horizontal scaling to ingest high volumes of data and offers built-in reliability features such as automatic retries, error handling, and alerting. This ensures that ingestion pipelines remain robust and resilient in production environments.
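For readers who have had to hand-roll this kind of resilience, the following sketch shows the general pattern of automatic retries with exponential backoff that a managed service takes off your plate. It is an illustration only, not how the platform implements retries: `run_with_retries`, `flaky_ingest`, and the delay values are hypothetical.

```python
import time

def run_with_retries(task, max_attempts=3, base_delay=0.01, sleep=time.sleep):
    """Call task(); on failure, wait with exponential backoff and try again."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                # Out of attempts: surface the error so alerting can pick it up.
                raise
            # Delay doubles on each failed attempt: base, 2*base, 4*base, ...
            sleep(base_delay * 2 ** (attempt - 1))

# Hypothetical ingestion step that fails twice before succeeding.
calls = {"count": 0}

def flaky_ingest():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "loaded"

outcome = run_with_retries(flaky_ingest)
print(outcome, calls["count"])
```

Transient source outages are absorbed by the retry loop, while persistent failures still raise after the final attempt rather than being silently swallowed.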

6. End-to-End Visibility and Monitoring

Operational transparency is a core focus of Lakeflow Connect. It offers built-in dashboards that track pipeline performance, ingestion lag, error metrics, and data freshness in real time. These observability tools allow teams to proactively manage data workflows, quickly address failures, and maintain SLAs on ingestion performance.
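As a rough illustration of what "ingestion lag" and an SLA check mean in practice, the sketch below computes lag as the gap between the latest change committed at the source and the latest change landed in the Lakehouse. The function names, timestamps, and the five-minute SLA are assumptions chosen for the example, not values or APIs from the product.

```python
from datetime import datetime, timedelta, timezone

def ingestion_lag(last_source_commit: datetime, last_ingested_commit: datetime) -> timedelta:
    """Lag is how far the ingested data trails the source, never negative."""
    return max(last_source_commit - last_ingested_commit, timedelta(0))

def breaches_sla(lag: timedelta, sla: timedelta) -> bool:
    """True when the observed lag exceeds the agreed freshness target."""
    return lag > sla

# Hypothetical timestamps for a single pipeline.
source_ts = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
ingested_ts = datetime(2025, 1, 1, 11, 52, tzinfo=timezone.utc)

lag = ingestion_lag(source_ts, ingested_ts)
alert = breaches_sla(lag, timedelta(minutes=5))
print(lag, alert)
```

Tracking this single number per pipeline is often enough to tell whether a freshness SLA is at risk before downstream consumers notice.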

7. Complementary Role Within the Lakeflow Ecosystem

While Lakeflow Connect specializes in ingestion, it is designed to work seamlessly with other components of the Lakeflow suite, namely Lakeflow Pipelines for data transformation and Lakeflow Jobs for orchestration. This modular approach allows organizations to build fully managed, end-to-end data workflows, with Lakeflow Connect ensuring reliable, governed data onboarding into the Lakehouse as the foundational step.

Together, these powerful capabilities enable enterprises to master complex data flows with end-to-end control.

By centralizing and simplifying ingestion, Lakeflow Connect becomes a strategic asset for enterprise-scale analytics and AI readiness.

What makes it stand out

Lakeflow Connect isn’t just another data integration tool. Its design is fundamentally aligned with the modern data stack and Lakehouse architecture. Some of the key features that set it apart include:

- Built-in CDC Support: Capture and apply incremental changes from source systems with ease, enabling near real-time ingestion into your Lakehouse.

- Default Connectors: Out-of-the-box connectivity for popular systems reduces engineering overhead and accelerates time to value.

- Enhanced Data Quality Checks: Automatically validate and ensure data integrity throughout the ingestion process to maintain trusted datasets.

- Integrated Orchestration: Seamlessly coordinate ingestion workflows with native job scheduling and dependency management for reliable pipeline execution.

- Simple UI: Ingest and configure data sources through a low-code/no-code visual interface, minimizing reliance on custom coding.

- Monitoring Made Easy: Comes with out-of-the-box monitoring dashboards that provide visibility into pipeline performance, failures, and data flow in real time.

These capabilities empower teams to move beyond fragmented ingestion solutions and towards a future-ready approach.

Real-world use cases and business value

Lakeflow Connect is already showing tangible value across industries. From integrating operational databases for real-time business dashboards to consolidating enterprise SaaS application data for analytics and forecasting, the benefits are wide-ranging:

Efficiency and Speed

- Reduced engineering effort through plug-and-play, managed connectors.

- Faster pipeline setup and management enabled by a low-code/no-code interface.

- Increased operational efficiency by centralizing ingestion within a single, governed platform.

Data Quality and Governance

- Consistent enforcement of governance throughout the data ingestion lifecycle.

- Improved data trust with Unity Catalog integration, access controls, and built-in quality checks.

Real-Time and Analytics Readiness

- Real-time data readiness with native CDC and streaming ingestion capabilities.

- Enhanced freshness and availability of data for analytics and AI applications.

- Accelerated time-to-insight by reducing latency between source data and analytics models.

Final thoughts

Lakeflow Connect reimagines how organizations bring data into the Lakehouse without the complexity of traditional integration methods. With built-in change data capture, native support for both batch and streaming workloads, and a wide range of prebuilt connectors, it removes the common challenges associated with sourcing data from various systems and enterprise applications. This creates a centralized, governed, and scalable approach to managing data that supports agility and long-term growth.

As a trusted Databricks partner, zeb works closely with enterprises to implement Lakeflow Connect in ways that align with their architecture and strategic goals. From simplifying integration across environments to enabling secure and real-time data movement, we help organizations transform fragmented data into a unified and reliable foundation.

Ready to streamline your data ingestion strategy? Let Databricks Lakeflow Connect, combined with zeb’s expertise, take you there.