“59% of the businesses today are using data analytics in different ways to make better decisions and increase the performance of the businesses” – Forbes

Business data continues to be one of the most valuable assets for enterprises today. The continuous data explosion necessitates efficient storage and optimization, making data refinement a crucial aspect. However, with the ever-growing volume of data, businesses find it challenging to manage that data and stay competitive in the industry.

As a result of this exponential data growth, businesses increasingly want a well-designed cloud-based data platform that serves as the foundation for their data analytics, supporting scalable storage, data processing, and machine learning while optimizing costs.

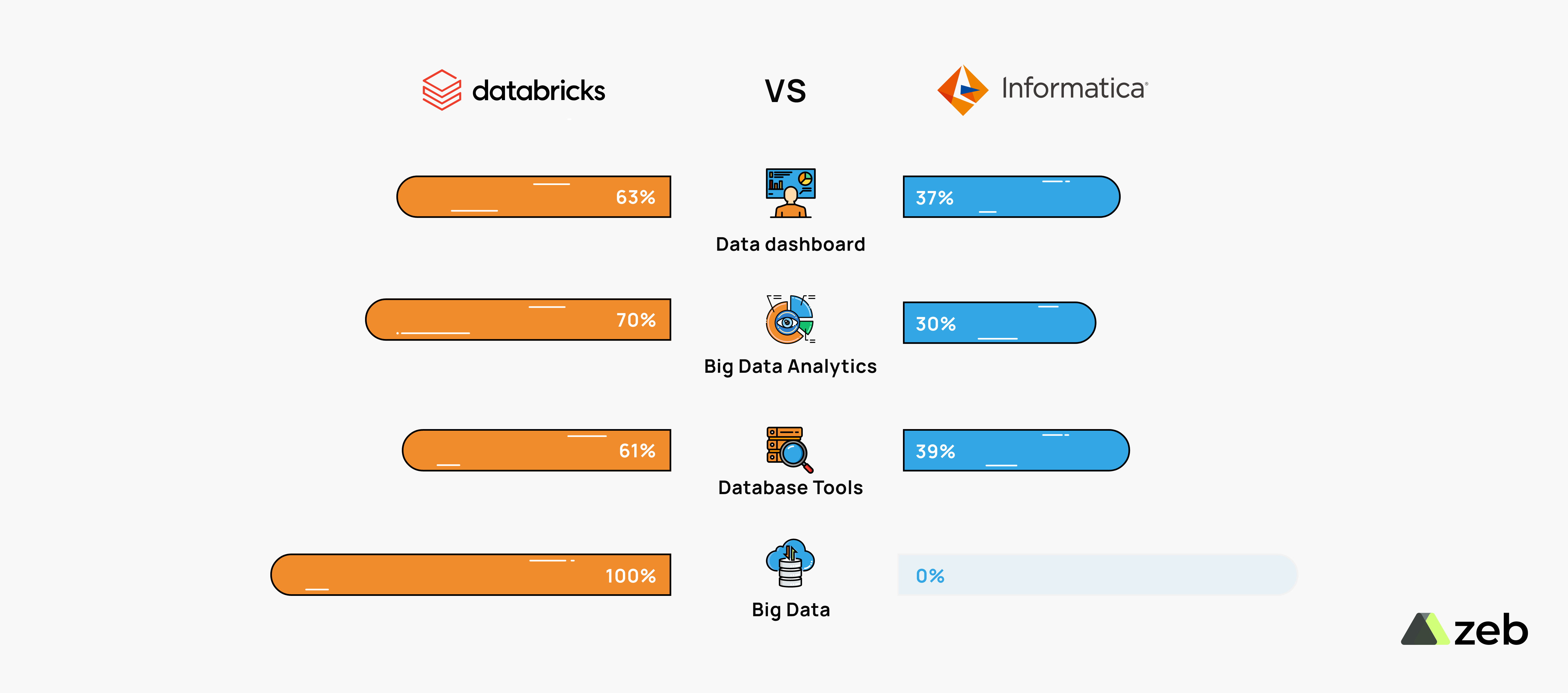

Managing all forms of data is important for businesses, and preparing and curating that data for advanced ML and AI use cases is even more crucial now. Currently, Informatica PowerCenter doesn’t support these use cases and does not make the cut for modern data integration and data management scenarios. Hence, organizations are moving to Databricks to leverage its advanced features and meet present-day cloud data requirements.

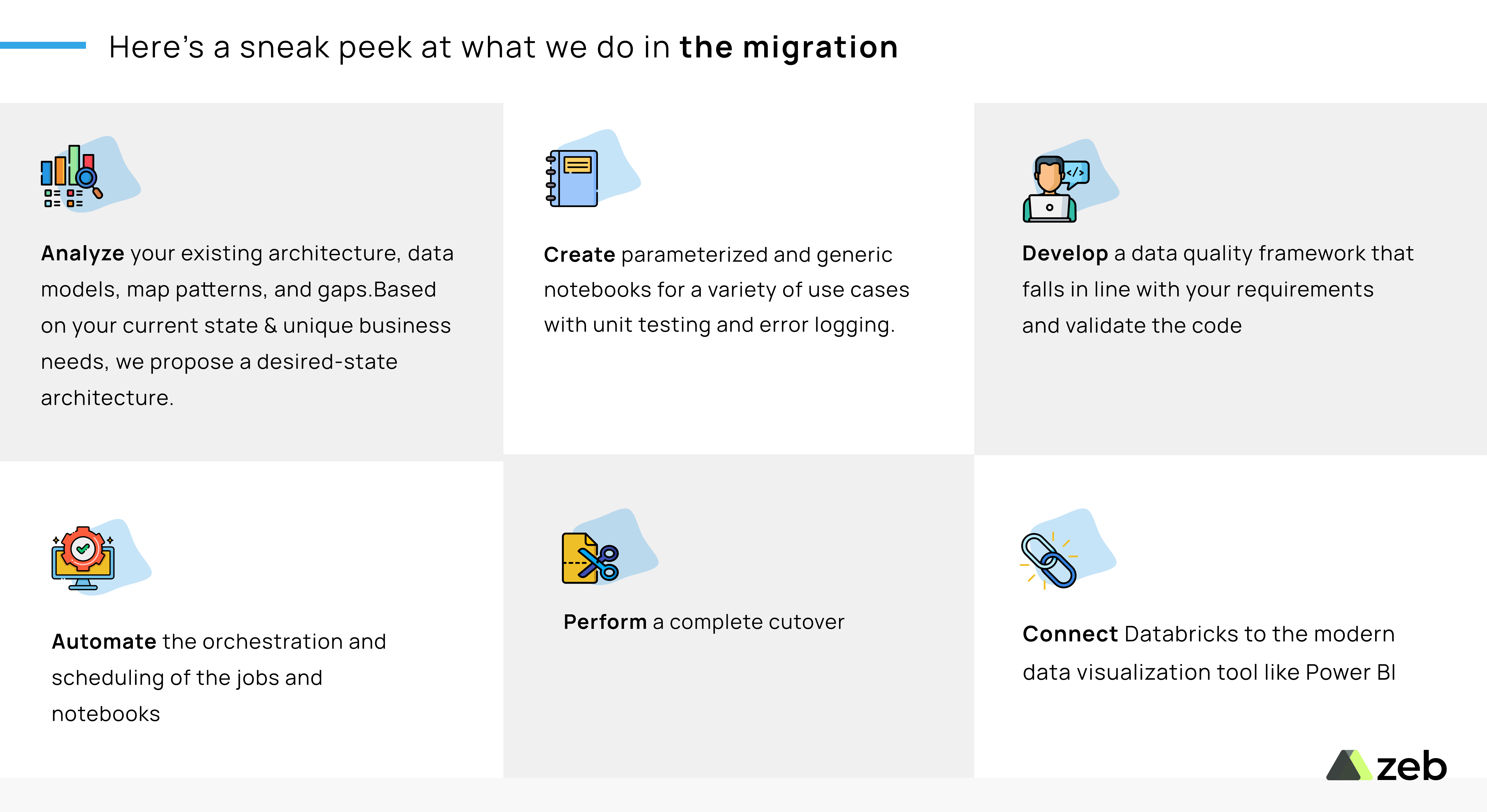

Meticulous planning is crucial when migrating data from Informatica to Databricks. We thoroughly assess and analyze the platform’s architecture and the databases involved, including their data formats, integrations, and endpoints. Based on this analysis, our team of data engineering experts develops a strategic migration roadmap for your business needs. Along the way, we add value through error logging, data reconciliation, and a data quality framework.
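To make the data reconciliation step more concrete, here is a minimal sketch in plain Python. It is not our production framework, only an illustration under stated assumptions: the function names (`row_fingerprint`, `reconcile`), the sample rows, and the column names are all hypothetical, and a real migration would read both sides from the legacy target and the new lakehouse table rather than from in-memory lists.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the chosen columns of one row into a stable hex digest."""
    joined = "|".join(str(row[col]) for col in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, columns):
    """Compare two extracts by row count, missing keys, extra keys, and changed rows."""
    source = {row[key]: row_fingerprint(row, columns) for row in source_rows}
    target = {row[key]: row_fingerprint(row, columns) for row in target_rows}
    shared = source.keys() & target.keys()
    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Hypothetical sample extracts standing in for the legacy and migrated tables.
legacy = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 250},
    {"id": 3, "amount": 75},
]
migrated = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 205},  # value changed during migration
]

report = reconcile(legacy, migrated, key="id", columns=["id", "amount"])
print(report)
```

A report like this can feed the error log: any non-empty `missing_in_target` or `mismatched` list marks the load as needing review before cut-over.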

Before exploring what magic Databricks can do for your business, let us first understand the limitations of Informatica.

Here are the main challenges with Informatica PowerCenter:

Licensing Constraints

For small and medium-sized businesses, it is not feasible to shell out huge license costs. Informatica is expensive, and the licensing complexity makes it even more difficult for enterprises to continue using it.

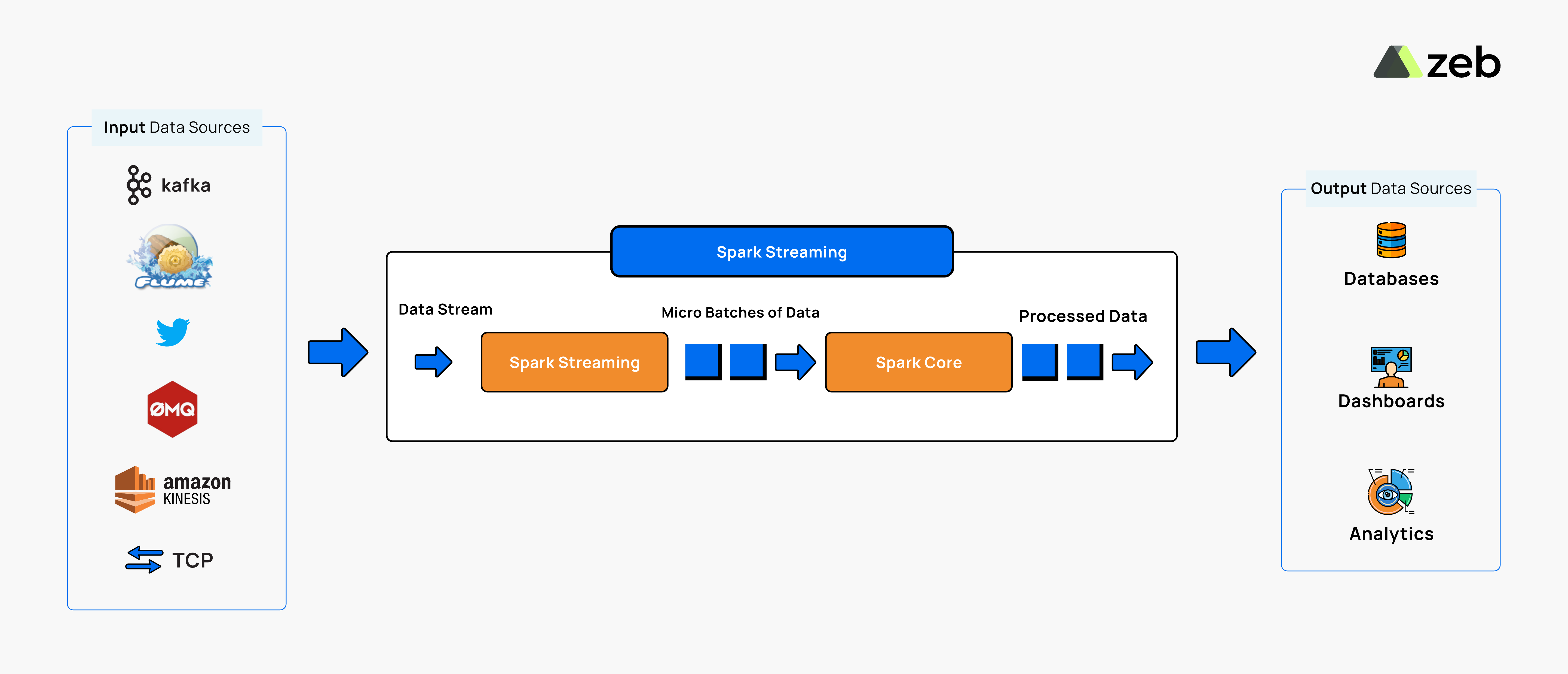

Limited or no streaming capabilities

Informatica PowerCenter can handle streaming only when other services such as Kafka are involved; it has no native streaming capability. On its own, it is limited to batch processing and does not support real-time data streaming. Real-time, high-volume data ingestion and processing require big data infrastructure, which is out of scope for Informatica PowerCenter.

High maintenance

Since it’s hosted on-prem, Informatica requires continuous monitoring, ongoing infrastructure maintenance, and administration of resources. Without these, it is difficult to keep the platform running.

Limited Cloud integration capabilities

Informatica PowerCenter is not well suited to cloud applications such as SAP, Salesforce, and Dynamics 365. Its web services support and API management are comparatively limited. You cannot build a cloud-based data warehouse or data lake with it, and projects frequently demand upgrades.

Complex UI

The UI of the application is complicated, and a new Informatica user will find it difficult to navigate and get familiar with the options and functionalities. Gathering the requirements and adding the components to the layout isn’t as simple as it seems.

Low compatibility with common languages

You can only use SQL in Informatica to transform the source data. You cannot write custom code and expressions in other languages like Python, Java, or C#, so you have to rely on the ETL components that are available on the platform.

Absence of native connectivity

You cannot connect SaaS apps and platforms natively to Informatica, as there are no native connectors for the most widely used third-party applications such as Google Analytics, Salesforce, Stripe, and Google Ad Manager.

Overhead resource consumption

Because clusters are configured manually, provisioning and scaling take more time. As a result, much of the team’s time goes into troubleshooting, resource management, and infrastructure management just to keep the end-to-end pipeline running without disruption. This leads to wasted resources and unnecessary costs.

Scalability shortfall

With Informatica, organizations have little room to scale when they have massive volumes of data to process. The reason is that the Informatica platform is on-prem and cannot support big data processing.

Lack of advanced ML & AI capabilities

Informatica does not have an integrated end-to-end ML and AI environment. It fails to provide an advanced AI and ML ecosystem for its users, which creates difficulties for your data engineers and data scientists. As a result, cross-team collaboration on advanced model training, experiment tracking, and feature development becomes impractical.

So how does Databricks extend beyond the Informatica features and fill the gap?

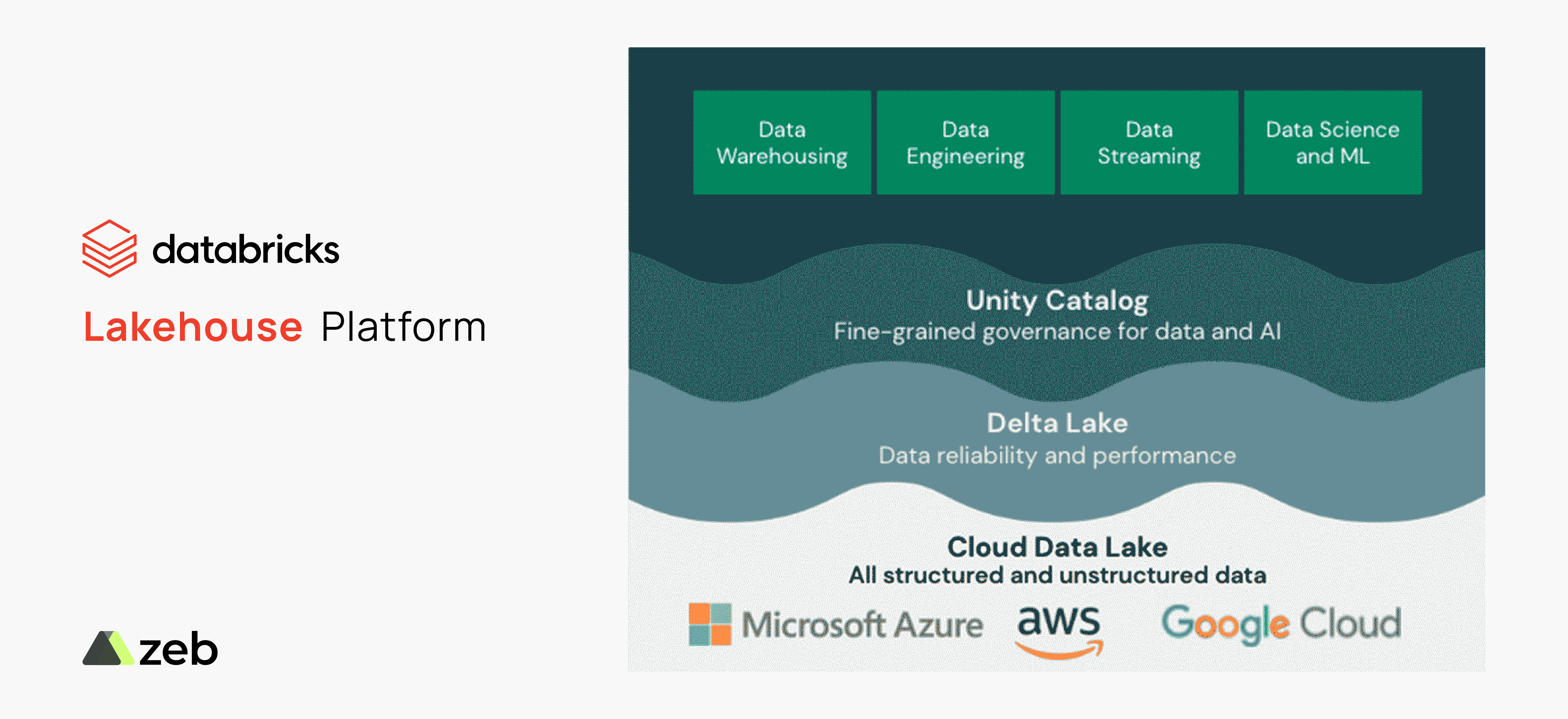

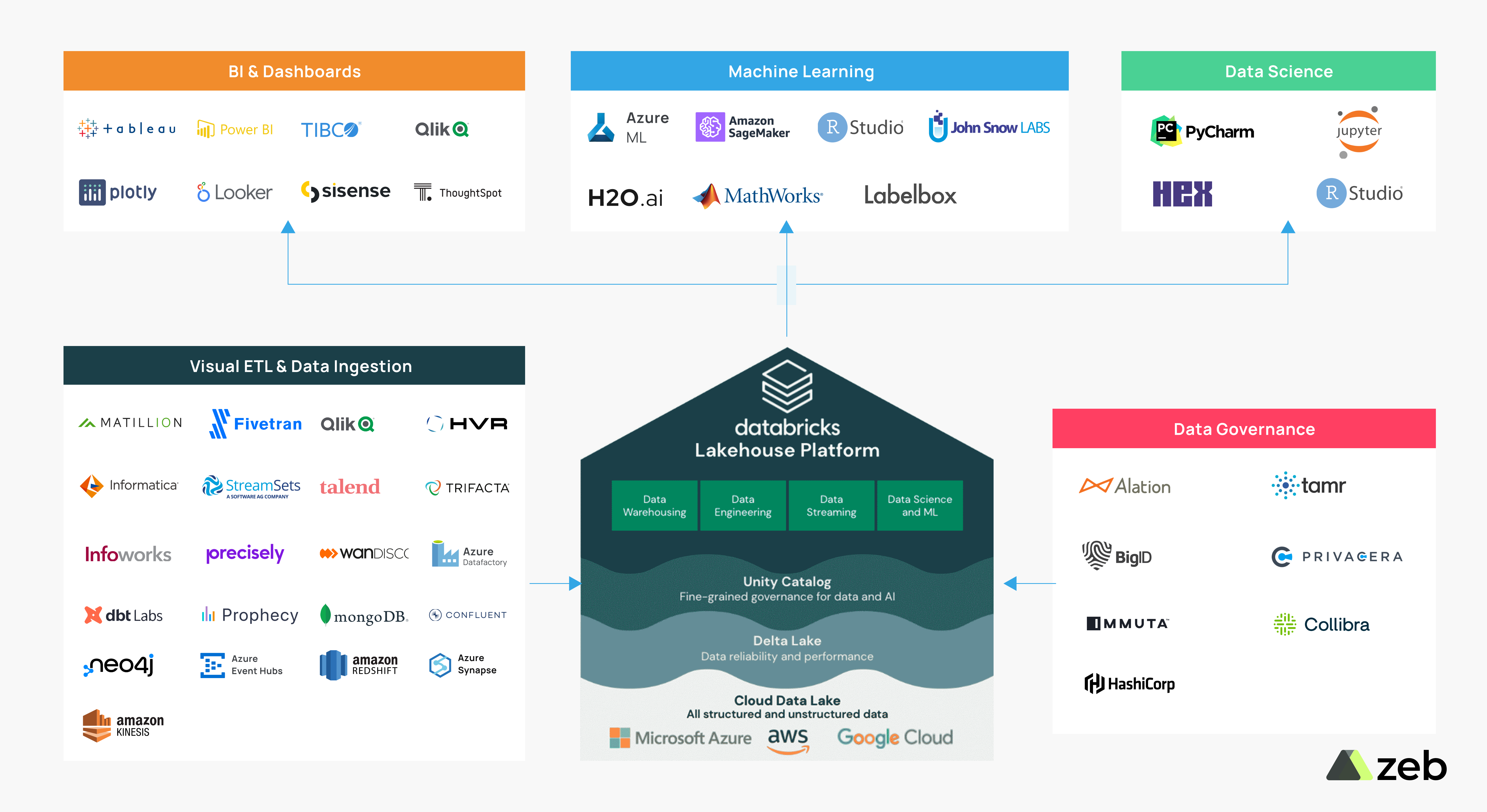

Databricks is an all-in-one and unified platform for integration, storage, and data warehousing. It is a collaborative platform for data engineers and data scientists to perform ETL tasks and build ML models using the same platform.

With Databricks, you can focus on solving real business challenges and staying ahead of the competition, while the platform handles the rest of the data tasks.

Let us explore why you should migrate to Databricks today.

Cloud agnostic leader

Databricks is a cloud-agnostic platform with a unified data lakehouse for enterprise data management and visualization. We will help you use the capabilities of multiple clouds through one unified data platform while building on your existing data lake in the cloud storage of your choice.

Automatic cluster allocation

Databricks optimizes cluster usage by automatically allocating cluster resources based on workload volume, without any impact on your pipelines. We will configure all-purpose clusters and job clusters so that your teams can analyze data collaboratively and run robust automated jobs based on your business needs.
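As a rough illustration of what such a configuration can look like, the sketch below builds a cluster definition with autoscaling bounds as a JSON payload. The field names (`cluster_name`, `spark_version`, `node_type_id`, `autoscale`, `autotermination_minutes`) follow the Databricks Clusters REST API as we understand it, so please verify them against the current documentation. The helper `build_cluster_spec`, the cluster name, the runtime label, and the node type are hypothetical placeholders, and the sketch only builds the payload without calling any API.

```python
import json


def build_cluster_spec(name, min_workers, max_workers, spark_version, node_type_id,
                       autotermination_minutes=30):
    """Build a cluster definition whose worker count can float between two bounds."""
    # This sketch requires a real range so the platform has room to scale.
    if min_workers < 0 or max_workers <= min_workers:
        raise ValueError("expected 0 <= min_workers < max_workers")
    return {
        "cluster_name": name,
        "spark_version": spark_version,  # placeholder runtime label
        "node_type_id": node_type_id,    # placeholder; depends on the cloud provider
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        "autotermination_minutes": autotermination_minutes,
    }


# Hypothetical values for illustration only.
spec = build_cluster_spec(
    name="etl-migration",
    min_workers=2,
    max_workers=8,
    spark_version="13.3.x-scala2.12",
    node_type_id="i3.xlarge",
)
payload = json.dumps(spec, indent=2)
print(payload)
```

Keeping the bounds in a small helper like this makes it easy to review the minimum and maximum worker counts per workload before anything is provisioned.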

Flexibility to use multiple languages

The Databricks notebook interface allows you to use multiple languages in the same notebook. Spark SQL, R, Python, and Scala are the languages you can switch between using the ‘magic commands’. For instance, you can perform data transformation using SQL, predict using Spark’s MLlib, evaluate the model’s performance in Python, and visualize the results in R.

Big data processing and streaming

As Databricks is built on top of Apache Spark, real-time data processing and analytics can be done on the same platform. Because streaming is natively managed by Databricks, we can help you process data in real time, as and when it arrives, rather than in batches.

Manage Machine Learning workflows and MLOps natively

Databricks offers managed MLflow for experimentation, deployment, and model management. Using the ML capabilities of the same platform, we will help you process your organization’s massive data volumes and derive intelligent insights from them.

Easy navigation and simple UI

Databricks has a very easy-to-use graphical data interface for the workspace folders and the data objects in it. You can develop notebooks and run the cells without any extra support or guidance as the actions can be performed using shortcuts and magic commands.

Benefits of native connectors

With Databricks, we will help you build an end-to-end data lifecycle in a few clicks and rapidly expand the capabilities of your lakehouse with third-party applications. We will also help you connect seamlessly across every phase of your data transformation journey, from data ingestion and pipeline building to data governance, data science, ML, and BI dashboards, all on the same platform with native connectors.

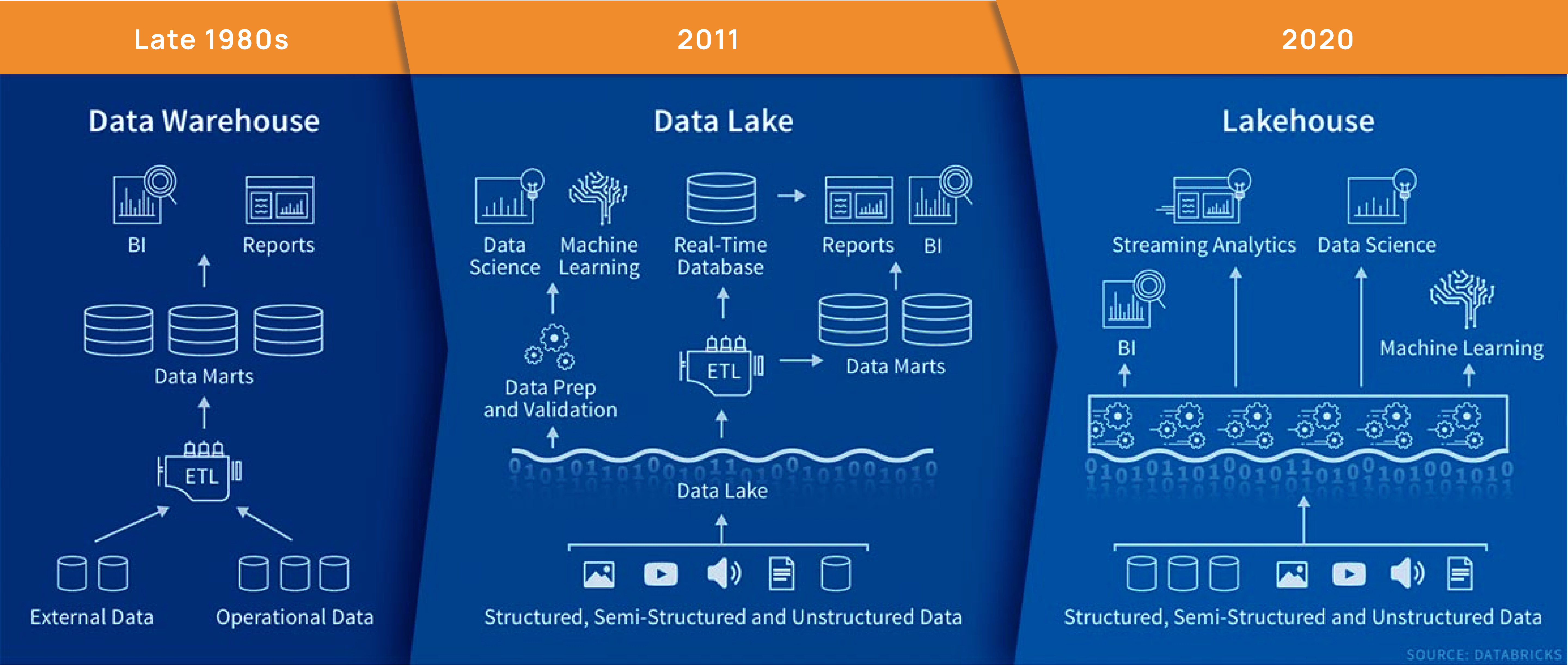

Easy storage with Databricks lakehouse

With a data lake or lakehouse, you can store all types of data because it is based on open-standard formats, and it is considerably cheaper too. This means you will have no bottlenecks between your data lake and external sources. We will help you add new sources of data without maintaining multiple copies of the data sets. Extracting data from the lake to share it with other locations is also simpler with Databricks.

Low maintenance and pay-as-you-use model

The flexibility to pay only for what you use suits organizations of all sizes. Databricks has a low maintenance cost because the platform runs in the cloud. With automated cluster allocation and managed Spark, your overall spend on architecture maintenance and infrastructure decreases dramatically.
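The pay-as-you-use idea can be shown with simple arithmetic. Databricks meters compute in Databricks Units (DBUs), so usage-based cost is roughly DBUs consumed multiplied by the price per DBU. Every number below is hypothetical and chosen only to keep the arithmetic easy to follow. Actual DBU rates vary by cloud, tier, and workload type, and the cloud provider bills the underlying virtual machines separately.

```python
def monthly_compute_cost(dbu_per_hour, hours_per_day, price_per_dbu, days=30):
    """Usage-based cost: DBUs consumed multiplied by the price per DBU."""
    return dbu_per_hour * hours_per_day * days * price_per_dbu


# Hypothetical workload: the same cluster size, used in two different ways.
always_on = monthly_compute_cost(dbu_per_hour=4, hours_per_day=24, price_per_dbu=0.5)
nightly_job = monthly_compute_cost(dbu_per_hour=4, hours_per_day=3, price_per_dbu=0.5)

print(f"Always-on cluster: {always_on:.2f}")
print(f"Nightly 3-hour job: {nightly_job:.2f}")
print(f"Difference: {always_on - nightly_job:.2f}")
```

In this made-up scenario, a job cluster that runs three hours a night and then terminates costs one eighth of an always-on cluster of the same size, which is the kind of saving a fixed on-prem footprint cannot offer.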

Your next steps

Deciding to migrate to a new platform isn’t easy. But if you wish to:

- Do more with your data

- Stay competitive by leveraging modern cloud capabilities

- Cut down your legacy system licensing cost

- Adopt the latest technologies

- Derive informative insights using data analytics and AI

- Reduce the infrastructure maintenance cost

- Achieve your business goals

Then you should definitely consider migrating from Informatica PowerCenter to Databricks today.

Being an industry leader and a Databricks partner, we can guide you throughout the migration journey and provide the support needed to achieve a data-driven business model.

To learn more about our process, refer to our Informatica PowerCenter to Databricks Migration Guide.

Struggling with the migration and not sure where to begin? Work with our data science experts to evaluate your current architecture, review the challenges, and validate a feasible and sustainable migration solution.