Enterprises in the consumer, media, entertainment, and gaming (CMEG) industries face constant pressure to deliver personalized experiences and insights to their audiences in real time. From streaming platforms and game studios to marketing agencies and content providers, data-driven engagement isn’t just an advantage; it’s essential for customer retention and loyalty. Timely access to the right data can mean the difference between capturing an audience’s attention and missing the moment entirely.

Real-time data delivery enables these organizations to continuously unify data from diverse sources (customer interactions, content consumption patterns, social engagement signals, in-app behaviors, and ad performance metrics) into a single, trusted Customer 360 view. With this unified perspective, teams can personalize experiences dynamically, optimize campaign performance as it happens, and respond instantly to audience trends or player behavior.

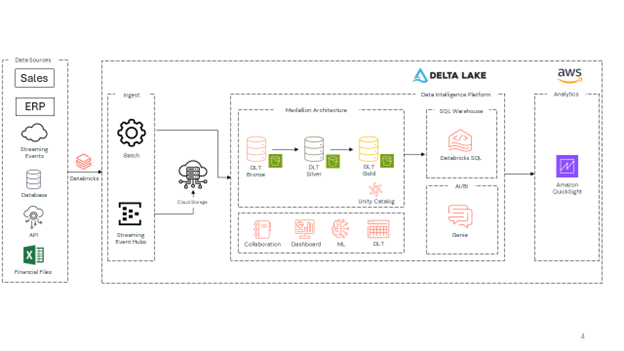

Platforms like Databricks make this possible by connecting streaming, marketing, and transactional datasets across silos into a governed Lakehouse foundation. This unified approach powers use cases such as real-time content recommendations, dynamic offer targeting, churn prediction, and monetization optimization. Ultimately, a complete and current view of every customer or player enables media and entertainment brands to deliver the right message, through the right channel, at the right moment—driving deeper engagement, loyalty, and lifetime value.


A core enabler of this transformation is the ability to build a unified Customer 360 view. For CMEG organizations, this means breaking down the silos between streaming platforms, ad networks, social channels, gaming telemetry, and CRM systems to bring every interaction into one cohesive data model. With this holistic view, brands can understand each customer’s preferences, engagement history, and intent in real time—unlocking hyper-personalized recommendations, adaptive content delivery, and seamless cross-channel experiences. Databricks empowers this unification by integrating structured and unstructured data at scale, providing a real-time foundation for analytics, machine learning, and campaign optimization. The result is not just richer insights, but the ability to anticipate audience needs and act instantly—creating deeper loyalty, higher engagement, and measurable growth across every touchpoint.
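On Databricks this unification would typically be a join or `MERGE` over Delta tables. The plain-Python sketch below only illustrates the underlying idea: folding events from several source systems into one record per customer. The field names (`customer_id`, `source`, `ts`, `minutes`, `clicks`) and the `CustomerProfile` shape are hypothetical, not a Databricks schema.

```python
from dataclasses import dataclass, field


@dataclass
class CustomerProfile:
    """Toy Customer 360 record: one profile per customer, fed by many sources."""
    customer_id: str
    sources: set = field(default_factory=set)
    minutes_watched: float = 0.0
    ad_clicks: int = 0
    last_seen: int = 0  # epoch seconds of the newest event from any source


def unify(events):
    """Fold raw events from several source systems into one profile per customer.

    Each event is a dict with hypothetical keys: customer_id, source, ts, and
    optional metrics such as minutes (content consumption) or clicks (ads).
    """
    profiles = {}
    for e in events:
        cid = e["customer_id"]
        if cid not in profiles:
            profiles[cid] = CustomerProfile(cid)
        p = profiles[cid]
        p.sources.add(e["source"])
        p.minutes_watched += e.get("minutes", 0.0)
        p.ad_clicks += e.get("clicks", 0)
        p.last_seen = max(p.last_seen, e["ts"])
    return profiles


# Usage: three events arriving from three different systems.
events = [
    {"customer_id": "c1", "source": "streaming", "ts": 100, "minutes": 42.0},
    {"customer_id": "c1", "source": "ad_network", "ts": 130, "clicks": 2},
    {"customer_id": "c2", "source": "game_telemetry", "ts": 90},
]
profiles = unify(events)
print(profiles["c1"])
```

Keying everything on one customer identifier is the simplifying assumption here; real CMEG data usually needs an identity-resolution step first, because the same person can appear under different IDs in the ad network, the game, and the CRM.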

Streaming capabilities in Databricks

Databricks provides first-class support for streaming and real-time data delivery. Because the same codebase supports both batch and streaming workloads, a sink (essentially a “write” of the data to its destination) can be fed from either a streaming source or a batch source.
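In PySpark the usual pattern is a function that takes a DataFrame, applied to both a `spark.read` result and a `spark.readStream` result. The sketch below mimics that idea in plain Python, with lists standing in for DataFrames and micro-batches; the `transform` logic and the field names are hypothetical.

```python
from typing import Iterable, Iterator, List


def transform(rows: List[dict]) -> List[dict]:
    """Business logic written once: keep completed plays and flag engaged viewers."""
    return [
        {**r, "engaged": r["seconds"] >= 30}
        for r in rows
        if r["status"] == "completed"
    ]


def run_batch(rows: List[dict]) -> List[dict]:
    # Batch: the whole dataset is available up front and processed in one pass.
    return transform(rows)


def run_stream(micro_batches: Iterable[List[dict]]) -> Iterator[List[dict]]:
    # Streaming: the same function is applied to each micro-batch as it arrives.
    for batch in micro_batches:
        yield transform(batch)


plays = [
    {"user": "u1", "status": "completed", "seconds": 45},
    {"user": "u2", "status": "abandoned", "seconds": 5},
    {"user": "u3", "status": "completed", "seconds": 12},
]
batch_result = run_batch(plays)
# Split the same data into two micro-batches and flatten the streamed output.
stream_result = [row for out in run_stream([plays[:2], plays[2:]]) for row in out]
print(batch_result == stream_result)  # True
```

The batch and streaming results match here because `transform` is stateless and works row by row. Aggregations such as per-user totals need state carried across micro-batches, which is exactly what a streaming engine manages for you.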

Databricks has two services tailor-made to deliver data on a real-time or near-real-time basis:

- Structured Streaming

- Delta Live Tables

Structured Streaming

Structured Streaming is the core engine that powers data streaming on Databricks, providing a unified API for batch and stream processing with the same codebase (https://www.databricks.com/product/data-streaming). It enables continuous data processing, allowing organizations to respond immediately to incoming data, for example files landing in a cloud storage bucket (https://www.integrate.io/blog/the-only-guide-you-need-to-set-up-databricks-etl/). Key features include:

- Unified batch and streaming APIs in SQL and Python

- Automatic scaling and fault tolerance

- Seamless integration with Delta Lake for optimized storage
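Fault tolerance in Structured Streaming rests on a checkpoint (set with `.option("checkpointLocation", ...)` on the stream writer) that records how far the query has progressed, so a restarted query resumes where it left off. The toy below imitates only that resume-from-offset idea in plain Python; `CheckpointedProcessor` and its JSON offset file are hypothetical and far simpler than Spark's offset and commit logs.

```python
import json
import os
import tempfile


class CheckpointedProcessor:
    """Toy micro-batch processor that resumes from its last committed offset."""

    def __init__(self, checkpoint_path: str):
        self.checkpoint_path = checkpoint_path

    def _load_offset(self) -> int:
        # No checkpoint yet means this is the first run: start at the beginning.
        if not os.path.exists(self.checkpoint_path):
            return 0
        with open(self.checkpoint_path) as f:
            return json.load(f)["next_offset"]

    def _commit(self, next_offset: int) -> None:
        # Write to a temp file, then rename, so a crash never leaves a half-written checkpoint.
        tmp = self.checkpoint_path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"next_offset": next_offset}, f)
        os.replace(tmp, self.checkpoint_path)

    def run_once(self, log: list, sink: list, max_batch: int = 2) -> int:
        """Process one micro-batch from `log` into `sink`; return rows processed.

        The sink write and the commit are not atomic here, so this toy is
        at-least-once. Structured Streaming pairs checkpoints with replayable
        sources and idempotent or transactional sinks (such as Delta) to get
        end-to-end exactly-once results.
        """
        start = self._load_offset()
        batch = log[start:start + max_batch]
        sink.extend(batch)
        self._commit(start + len(batch))
        return len(batch)


checkpoint_dir = tempfile.mkdtemp()
path = os.path.join(checkpoint_dir, "offsets.json")
log = ["e1", "e2", "e3"]
sink = []
CheckpointedProcessor(path).run_once(log, sink)  # first run processes e1, e2
# Simulate a restart: a brand-new processor object reads the same checkpoint.
CheckpointedProcessor(path).run_once(log, sink)  # resumes with e3
print(sink)  # ['e1', 'e2', 'e3']
```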

Delta Live Tables

Delta Live Tables (DLT) offers a declarative approach to data engineering, simplifying the creation and management of streaming data pipelines. Benefits include:

- Automated data quality checks and error handling

- Simplified ETL processes for both batch and streaming data

- Reduced time between raw data ingestion and cleaned data availability

- Support for streaming pipelines written as SQL queries

- Ability to write (“sink”) to multiple destinations at once
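In a DLT Python pipeline, data quality checks are declared with decorators such as `@dlt.expect` (keep violating rows but record them), `@dlt.expect_or_drop` (drop violating rows), and `@dlt.expect_or_fail` (stop the update). The plain-Python sketch below reproduces those three behaviors so the semantics are easy to see; `apply_expectations`, the `"warn"`/`"drop"`/`"fail"` labels, and the sample columns are hypothetical, not the DLT API.

```python
from typing import Callable, Dict, List, Tuple

# (name, predicate, action) where action is "warn", "drop", or "fail"
Expectation = Tuple[str, Callable[[dict], bool], str]


def apply_expectations(
    rows: List[dict], expectations: List[Expectation]
) -> Tuple[List[dict], Dict[str, int]]:
    """Return the rows that survive plus a violation count per expectation."""
    kept = []
    violations = {name: 0 for name, _, _ in expectations}
    for row in rows:
        keep = True
        for name, predicate, action in expectations:
            if predicate(row):
                continue
            violations[name] += 1
            if action == "fail":  # like expect_or_fail: abort the whole run
                raise ValueError(f"expectation {name!r} failed for row {row}")
            if action == "drop":  # like expect_or_drop: discard the row
                keep = False
            # "warn" (like expect): keep the row, only count the violation
        if keep:
            kept.append(row)
    return kept, violations


rows = [
    {"user_id": "u1", "watch_seconds": 120},
    {"user_id": None, "watch_seconds": 45},
    {"user_id": "u3", "watch_seconds": -5},
]
expectations = [
    ("valid_user", lambda r: r["user_id"] is not None, "drop"),
    ("non_negative_watch", lambda r: r["watch_seconds"] >= 0, "warn"),
]
kept, violations = apply_expectations(rows, expectations)
print(kept)
print(violations)  # {'valid_user': 1, 'non_negative_watch': 1}
```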

Implementing real-time solutions with Databricks

To achieve the goal of reaching customers in 8 seconds or less, consider the following implementation strategies:

Optimized Data Ingestion

Use Auto Loader for efficient, real-time data ingestion from cloud object storage. Auto Loader incrementally detects and processes new files as they arrive, and it can infer and evolve schemas automatically, which reduces latency in data processing.
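On Databricks, Auto Loader is used through `spark.readStream.format("cloudFiles")` with options such as `cloudFiles.format` and `cloudFiles.schemaLocation`. The sketch below does not use that API. It imitates two of the ideas in plain Python: reading only files that have not been seen before, and growing a column map as new fields appear. The helper names, the local temp directory standing in for a storage bucket, and the "first type seen wins" rule are all assumptions of this sketch.

```python
import json
import os
import tempfile
from typing import Dict, List, Set


def discover_new_files(directory: str, seen: Set[str]) -> List[str]:
    """Return JSON files not ingested yet (sorted) and remember them as seen."""
    new = sorted(f for f in os.listdir(directory) if f.endswith(".json") and f not in seen)
    seen.update(new)
    return new


def evolve_schema(records: List[dict], schema: Dict[str, str]) -> Dict[str, str]:
    """Add any new columns to `schema`; the first type seen for a column wins."""
    for rec in records:
        for key, value in rec.items():
            schema.setdefault(key, type(value).__name__)
    return schema


def ingest(directory: str, seen: Set[str], schema: Dict[str, str]) -> List[dict]:
    """Read only newly arrived newline-delimited JSON files and update the schema."""
    rows = []
    for name in discover_new_files(directory, seen):
        with open(os.path.join(directory, name)) as f:
            rows.extend(json.loads(line) for line in f if line.strip())
    evolve_schema(rows, schema)
    return rows


landing = tempfile.mkdtemp()  # stands in for a cloud storage landing path
seen, schema = set(), {}
with open(os.path.join(landing, "a.json"), "w") as f:
    f.write(json.dumps({"user_id": "u1", "event": "play"}) + "\n")
first = ingest(landing, seen, schema)
with open(os.path.join(landing, "b.json"), "w") as f:
    f.write(json.dumps({"user_id": "u2", "event": "pause", "position_s": 31}) + "\n")
second = ingest(landing, seen, schema)  # only b.json is read
print(schema)  # {'user_id': 'str', 'event': 'str', 'position_s': 'int'}
```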

Streamlined Processing

Use the enhanced autoscaling available for Delta Live Tables pipelines to optimize cluster utilization: it automatically allocates compute resources according to each workload’s demand, ensuring efficient processing of real-time data streams.
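Enhanced autoscaling is switched on in a pipeline’s cluster settings through an `autoscale` block with `"mode": "ENHANCED"`. The Python sketch below builds such a settings dictionary and prints it as JSON; the pipeline name, the worker bounds, and the validation rule are hypothetical, and the exact settings schema should be checked against current Databricks documentation before use.

```python
import json


def pipeline_settings(name: str, min_workers: int, max_workers: int) -> dict:
    """Build pipeline settings that request enhanced autoscaling.

    The name and worker bounds are illustrative; requiring at least one worker
    and min <= max is this sketch's own rule.
    """
    if not 1 <= min_workers <= max_workers:
        raise ValueError("expected 1 <= min_workers <= max_workers")
    return {
        "name": name,
        "continuous": True,  # keep the pipeline running for low-latency updates
        "clusters": [
            {
                "label": "default",
                "autoscale": {
                    "min_workers": min_workers,
                    "max_workers": max_workers,
                    "mode": "ENHANCED",  # request enhanced autoscaling
                },
            }
        ],
    }


settings = pipeline_settings("cmeg_customer_360", min_workers=1, max_workers=8)
print(json.dumps(settings, indent=2))
```

The upper bound is the main cost control here. Enhanced autoscaling decides how many workers to run inside that range, so `max_workers` caps spend during traffic spikes such as a live event or a game launch.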

Unified Governance

Implement Unity Catalog for integrated governance across all data and AI assets, ensuring secure and compliant real-time data processing.
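Unity Catalog addresses data with a three-level namespace (`catalog.schema.table`) and controls access with SQL `GRANT` statements. The sketch below composes the least-privilege statements a group needs to query one table; in a Databricks notebook each string could be passed to `spark.sql`. The catalog, schema, table, and group names are hypothetical, and the strict identifier check is this sketch’s own simplification (Unity Catalog accepts a wider set of names when they are quoted).

```python
import re
from typing import List

# Sketch-only rule: accept simple identifiers so names can be embedded unquoted.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def qualified_name(catalog: str, schema: str, table: str) -> str:
    """Build the three-level name Unity Catalog uses: catalog.schema.table."""
    for part in (catalog, schema, table):
        if not _IDENT.match(part):
            raise ValueError(f"unexpected identifier: {part!r}")
    return f"{catalog}.{schema}.{table}"


def read_grants(catalog: str, schema: str, table: str, group: str) -> List[str]:
    """Least-privilege grants a group needs to query one governed table."""
    full = qualified_name(catalog, schema, table)
    return [
        f"GRANT USE CATALOG ON CATALOG {catalog} TO `{group}`",
        f"GRANT USE SCHEMA ON SCHEMA {catalog}.{schema} TO `{group}`",
        f"GRANT SELECT ON TABLE {full} TO `{group}`",
    ]


for stmt in read_grants("main", "cmeg", "customer_360", "marketing_analysts"):
    print(stmt)  # in a Databricks notebook: spark.sql(stmt)
```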

Best practices for real-time data delivery

- Configure Auto-Scaling Clusters: Set up auto-scaling clusters with appropriate instance types to balance resource utilization and cost-effectiveness.

- Implement Robust Error Handling: Establish automated systems for monitoring and handling errors to maintain reliable real-time ETL workflows.

- Utilize Delta Lake: Store both streaming and batch data in Delta Lake for optimized storage and processing.

- Apply Real-Time Data Quality Checks: Use Delta Live Tables to perform real-time data quality checks and ensure data integrity.

- Monitor Performance: Regularly monitor pipeline performance using Databricks’ built-in tools and UI for immediate visibility into stream processing efficiency.
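Alongside the UI, Structured Streaming reports per-micro-batch progress (for example through `StreamingQuery.lastProgress`) with fields such as `batchId`, `inputRowsPerSecond`, `processedRowsPerSecond`, and `durationMs.triggerExecution`. The sketch below is a hypothetical `check_progress` helper that takes a dict shaped like that report and flags two common problems: processing slower than data arrives, and batches that exceed a latency budget. The 8,000 ms default mirrors the 8-second goal mentioned earlier and is an assumption; verify the field names against your Spark version.

```python
from typing import List


def check_progress(progress: dict, max_batch_ms: int = 8000) -> List[str]:
    """Return warnings for one micro-batch progress report (empty list if healthy)."""
    warnings = []
    in_rate = progress.get("inputRowsPerSecond") or 0.0
    out_rate = progress.get("processedRowsPerSecond") or 0.0
    # If rows arrive faster than they are processed, the backlog keeps growing.
    if in_rate > 0 and out_rate < in_rate:
        warnings.append(
            f"falling behind: processing {out_rate:.0f} rows/s vs {in_rate:.0f} rows/s arriving"
        )
    # Total wall-clock time of the micro-batch, as reported under durationMs.
    duration = progress.get("durationMs", {}).get("triggerExecution", 0)
    if duration > max_batch_ms:
        warnings.append(
            f"batch {progress.get('batchId')} took {duration} ms (budget {max_batch_ms} ms)"
        )
    return warnings


sample = {
    "batchId": 42,
    "inputRowsPerSecond": 1200.0,
    "processedRowsPerSecond": 950.0,
    "durationMs": {"triggerExecution": 9100},
}
for w in check_progress(sample):
    print(w)
```

Feeding these warnings into an alerting job is one way to cover the "Implement Robust Error Handling" practice too: a query that keeps falling behind is usually visible in these numbers well before it fails.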

Final thoughts

Delivering a real-time Customer 360 view is no longer optional for consumer, media, entertainment, and gaming organizations; it’s the foundation for lasting audience connection and growth. In a world where attention spans are measured in seconds, brands that can unify every customer interaction into a single, trusted view gain the agility to personalize experiences instantly, optimize monetization strategies, and deepen engagement across every channel. Databricks makes this possible through its Lakehouse architecture, which integrates streaming, marketing, and behavioral data with unified governance and machine learning–ready analytics. With this foundation, CMEG leaders can move beyond reactive reporting to proactive engagement, anticipating what their audiences want next and delivering it in real time.